We continue to bring you insights on how to improve your output delivery. The current series looks at hardware and software setup options and how actuaries (and, really, almost any professional) use them. [Here’s the overview.]

TL;DR of the last article: Using on-premises hardware gives local teams control over what kind of servers are set up, how many, how they’re used, and so on. That decision also brings heavier maintenance responsibilities, high up-front costs, and limits on how far the system can scale. Hosted solutions take those responsibilities and headaches away, while giving teams flexibility in resource use, scalability, and ongoing service improvement.

The “where it resides” dimension

In this article, we consider the same on-premises / hosted decision as it applies to actuarial modeling and the structure of the actuarial organization. We’re not the first to write about this topic. It has been discussed in the actuarial world for at least twenty years, and it has become more visible lately as companies and regulators responded to the 2008-2009 financial crisis. The COVID-19 global pandemic is only going to accelerate the push for operational improvements across the board, which means actuaries will be challenged to improve all of their work output however they can.

Setting up an appropriate distribution of responsibilities, then, is an area where the questions have big implications and the path forward is not so clear. Actuaries considering how much of their work to centralize (or not) will want to read on for the pros and cons of centralizing and decentralizing operations. We also offer for your consideration a third way of allocating work that has recently become an interesting alternative.

Who has the responsibility, anyway?

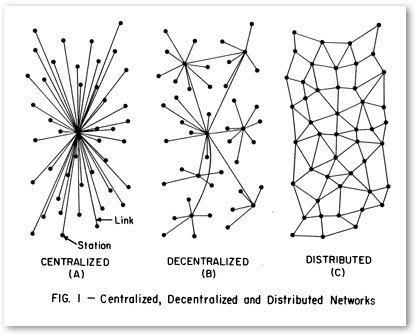

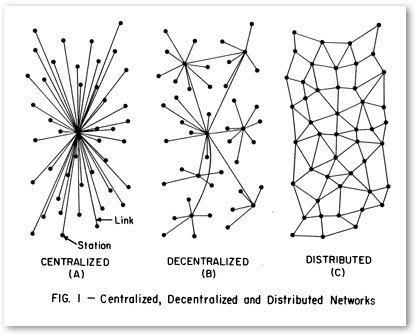

The question of where responsibility for actuarial work resides is, not surprisingly, analogous to the question of on-premises versus hosted solutions. Here’s what the traditional options look like for actuarial modeling:

| Structure | Defining Characteristics |
|---|---|
| Decentralized | Each functional business unit maintains its own models, governance procedures, and maintenance. Models and responsibilities are fully integrated into each business unit. Those business units generally don’t talk to each other, however, which is where the term “silo” comes from: vertical integration in the hierarchy with little cross-team communication. Analogous to an on-premises setup, where every end-user is responsible for their own maintenance and productivity. |
| Centralized | Modeling functionality is consolidated into a single group within the company, which develops and maintains all of the models. Business units may request changes (such as new features, riders, or optionality), but ultimately they are consumers of model output rather than owners. Analogous to a hosted solution, where end-users access a consolidated unit that can take advantage of economies of scale and deep expertise. |

In practice, companies may decide to centralize only certain portions of the actuarial portfolio (such as the modeling component), while the remaining decisions (such as rate-setting, prioritization, expense management, and raw analysis) stay within the functional units.

The pros and cons of decentralized modeling

Pros:

Individual business units can build deep expertise along the policy life cycle (research / experience / pricing / valuation / re-experience). This expertise can be reflected in the models built and deployed for these functions. You may be able to shorten turnaround time for questions about the models or their uses, because you’ve removed multiple layers of approvals and workflows.

Standards of practice and model use can also match the use case more closely. For example, it doesn’t make sense to manage an option budget for a block of term life business that has no such options. Adding those features to the term life model (because the UL model needs them) creates extra work and potential confusion.

Cons:

With more groups going their own way comes a need to manage ever-diverging standards. There is also a tendency for models to drift apart between units, since each unit has less incentive to keep its models consistent with the others.

There is even a chance of having completely different systems in place. Maybe one unit uses internally developed Excel models, while another uses modern actuarial software for its calculations. Some may argue this is another pro, in that each model can be tailored to its use case. That can certainly happen, yet it also creates friction when model results are rolled up and makes it harder to keep processes consistent across the various arms of an enterprise.

The pros and cons of centralized modeling

Pros:

When everything is brought together, there will naturally be economies of scale. Modeling specialists can develop high-level skills in setting up models, performing checks and balances, and trusting their peers during reviews.

Additionally, this consolidation will lead to consistency in modeling practice, governance, and maintenance. Managing to one set of standards is much easier than managing to many.

Cons:

When these model functions are separated from the business units that use the results, it can lead to a feeling of distance and distrust as the end-users have to give up their ability to fully vet individual components of the model. It can also lead to increased turnaround time as there are more steps in between question and answer.

Finally, centralization may increase competition for scarce resources in the central modeling unit, since prioritization now has more variables to weigh.

Making the choice is not easy

Centralization of the actuarial modeling function may be in vogue these days. But, as with any decision that has real alternatives, there’s no one-size-fits-all answer. Centralization will be right for some teams and not for others. Some who have centralized may soon wish to redistribute after seeing the effects, and vice versa.

The decision relates to how you wish to work. Here are some questions to ask as you make your selection.

How big is the team?

For small teams, it can seem like everyone is intimately involved in, and invested in, everything. It already feels like multiple overlapping teams working on everything all the time, which naturally tends toward a decentralized assignment of responsibilities.

For larger groups, distinct skill sets start to develop and the types of work begin to separate, because there is finally enough pricing or analysis work to keep one professional busy full-time.

The larger the team, the more likely you are to develop specialization of tasks and, therefore, be able to reap the benefits of economies of scale that come with centralization.

Who do you trust to make decisions?

If you trust the individual unit actuaries to follow the model governance framework, you can set appropriate standards and let those actuaries do good work. If you don’t (perhaps because of a lack of exposure to the products, or generally less experience on the team), you may lean towards consolidating that functionality again until the team has matured.

How fast do you want to go?

This also relates to the next dimension we’re going to look at in this series: whether you build everything at once and release it (waterfall) or deploy changes incrementally (agile). Waterfall processes tend to favor centralized setups. Agile processes lean towards decentralized (or distributed – see below) teams.

A desire for smaller, more frequent updates tends to lean towards decentralization, as units can push out their own changes as frequently as they wish, without waiting for other groups.

Which objectives are you considering?

Would there be different structures for different objectives or functions? Research and global economic analytics, for example, might be a consolidated function whereas compliance & filing might be distributed because of the nature of the work involved. A multinational organization might have both in place because it needs both the wide-reaching economic forecast as well as specific practices in individual jurisdictions.

Are you willing to accept some risk that’s appropriately compensated by a higher return?

Actuaries are often challenged to manage risk, and that sometimes gets conflated with minimizing risk. With decisions like this one, one of the two options (or some point on the spectrum between them) might be where risk is minimized. Comprehensive risk management, however, also factors in the possible upside of those risks and weighs risks against returns to determine the true potential of a decision. You may be able to advocate successfully for a structure away from the risk-minimizing one because its rewards are better than the alternatives. If so, you’ll be applying high-level actuarial expertise for the benefit of the whole organization.

It’s not an either/or decision

Consider this – there are more options than just centralized or decentralized.

There’s also a third model – the distributed work model.

In this setup, nodes (points of actuarial responsibility) are connected to a few others, rather than just one, leading to more options for paths of connection between them.
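To make the “more paths” idea concrete, here is a minimal Python sketch, assuming each unit is modeled as a node in an undirected graph. The team names and links are hypothetical, chosen only for illustration. The sketch checks whether the remaining units can still reach each other after one node drops out: in a hub-and-spoke (centralized) layout, losing the hub isolates everyone, while in a layout where each unit links to a few peers, no single loss disconnects the rest.

```python
from collections import deque
from typing import Optional

Graph = dict[str, set[str]]

def undirected(edges: list[tuple[str, str]]) -> Graph:
    """Build an adjacency map in which every link works in both directions."""
    graph: Graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph

def still_connected(graph: Graph, removed: Optional[str] = None) -> bool:
    """Breadth-first search: can every remaining node reach every other one?"""
    nodes = [n for n in graph if n != removed]
    if not nodes:
        return True
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:
        for neighbour in graph[queue.popleft()]:
            if neighbour != removed and neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(nodes)

# Hypothetical centralized layout: every unit talks only to one modeling hub.
centralized = undirected([("Hub", "UL"), ("Hub", "Annuity"), ("Hub", "Term"), ("Hub", "Valuation")])

# Hypothetical distributed layout: each unit links to two or three peers.
distributed = undirected([
    ("UL", "Annuity"), ("Annuity", "Term"), ("Term", "Valuation"),
    ("Valuation", "UL"), ("UL", "Term"),
])

print(still_connected(centralized, removed="Hub"))  # False
print(all(still_connected(distributed, removed=node) for node in distributed))  # True
```

The graph is only a stand-in for lines of communication and review; real organizations will weigh links very differently.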

The best-known application outside the actuarial world is the whole universe of blockchain applications, with Bitcoin as the most famous example. In these networks, many nodes are doing a little bit of the work all the time, and every node can see and check what the others have done, so there is no single point of failure. Distributed computing more generally works the same way: any node that is free can be assigned a piece of the calculation, and once the nodes finish, the partial results are brought back together. This maximizes efficiency and minimizes downtime, and the interconnection means that other parts of the network are always watching who’s doing what and taking notes.
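The split-then-consolidate pattern can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a model of how Bitcoin itself works: the cash flows, the 4% discount rate, and the function names are hypothetical, and a thread pool stands in for whichever nodes happen to be free. Each worker discounts its own slice of the cash flows, and a final consolidation step adds the partial results back together.

```python
from concurrent.futures import ThreadPoolExecutor

def present_value(chunk: list[tuple[int, float]], rate: float) -> float:
    """Discount one slice of (year, amount) cash flows back to time 0."""
    return sum(amount / (1 + rate) ** year for year, amount in chunk)

def split(items: list, n_chunks: int) -> list[list]:
    """Deal items round-robin into n_chunks slices of nearly equal size."""
    return [items[i::n_chunks] for i in range(n_chunks)]

def distributed_present_value(cash_flows: list[tuple[int, float]], rate: float, workers: int = 4) -> float:
    chunks = split(cash_flows, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each free worker picks up the next slice and values it independently.
        partials = list(pool.map(present_value, chunks, [rate] * len(chunks)))
    # Consolidation step: bring the partial results back together.
    return sum(partials)

# Hypothetical liability: 100 payable at the end of each of the next 30 years.
cash_flows = [(year, 100.0) for year in range(1, 31)]
print(round(distributed_present_value(cash_flows, 0.04), 2))
```

Threads keep the sketch simple; for CPU-heavy valuation work in Python, a `ProcessPoolExecutor` would usually be the better fit, and the split/consolidate structure stays the same.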

A distributed actuarial setup might mean assigning primary work responsibilities to various units, but distributing peer reviews, assumption reviews, documentation, and sign-off across other units. For example, the UL Pricing team could have its work peer-reviewed by the Annuity Pricing team once, the UL Valuation team the next time, and the Term Life forecasting team after that. That way you are constantly rotating who sees what, who does what, and how. You’re constantly balancing workloads across the whole company. And you’re creating robust backups for who can do what and who knows what, so that if one node gets knocked out, the work goes on with minimal disruption to the rest.
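One way to implement rotating peer reviews is a simple round-robin: in review cycle k, each team is reviewed by the team a fixed number of places further down a list, with the offset changing every cycle and never equal to zero. This is a hypothetical scheme sketched in Python, not a prescribed one, and the team list is illustrative. Because each cycle is a one-to-one shift, every team reviews exactly one other team per cycle, which keeps review workloads even, and over n − 1 cycles each team is reviewed by every other team once.

```python
TEAMS = ["UL Pricing", "Annuity Pricing", "UL Valuation", "Term Life Forecasting"]

def assign_reviewers(teams: list[str], cycle: int) -> dict[str, str]:
    """Map each team to the team that peer-reviews its work in the given cycle."""
    n = len(teams)
    if n < 2:
        raise ValueError("need at least two teams to rotate reviews")
    # Shift runs 1, 2, ..., n-1 and then repeats; it is never 0, so no team reviews itself.
    shift = 1 + cycle % (n - 1)
    return {teams[i]: teams[(i + shift) % n] for i in range(n)}

for cycle in range(3):
    print(cycle, assign_reviewers(TEAMS, cycle)["UL Pricing"])
# 0 Annuity Pricing
# 1 UL Valuation
# 2 Term Life Forecasting
```

With the teams listed in this order, UL Pricing’s reviewers follow the same sequence as the example in the text; reordering the list changes who reviews whom but keeps the load balanced.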

You’re creating redundancies in the skill sets of the actuaries performing this work. Should one of them be promoted or leave the company, you’re not also losing valuable intellectual capital about how the systems work, how the products interact, etc.

Exploring this idea fully would take another whole article. For now, suffice it to say that this alternative deserves consideration as a viable option. The broader approach has certainly become popular, as the growth of peer-to-peer networks and self-validating systems outside the actuarial world shows.

Why does it matter?

Why does the organization of the modeling function within an actuarial organization matter?

Because that decision affects which actuarial software you use for the work. If you opt for decentralization, you still want to maintain control, with consolidation (integration) at regular intervals to produce a company model, run validation, and so on. You don’t want parallel development that may diverge or create unnecessary redundancies.

With centralization, you run the risk of slowing down the process. However, could you also use the economies of scale and efficiencies of specialization that come with centralization to deliver more regular updates (i.e., “actuarial work as a service”) and smaller, more digestible output changes?

That way you’re not waiting 3 years for, say, 50 units of improvement delivered all at once. Instead, you get 3 units every 3 months. After 3 years (12 quarterly releases), then, you’re at 36 units of growth, which might feel short of where you would otherwise have been.

However, consider the intermediate time frame: you’ve been continuously advancing your actuarial practice all along, so your deliverables from the middle of year 1 through the end of year 3 are all better than the baseline.
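The arithmetic above can be checked with a short Python sketch. The 50-unit and 3-units-per-quarter figures come from the example; treating “units” as additive and counting each delivery at the end of its quarter are simplifying assumptions. It prints the cumulative improvement delivered by the end of each quarter under one big release versus quarterly releases.

```python
QUARTERS = 12            # three years, one release per quarter
BIG_BANG_UNITS = 50      # illustrative: one large release at the end of year 3
UNITS_PER_RELEASE = 3    # illustrative: a small release every 3 months

def waterfall_level(quarter: int) -> int:
    """Cumulative units delivered by the end of `quarter` under one big release."""
    return BIG_BANG_UNITS if quarter >= QUARTERS else 0

def incremental_level(quarter: int) -> int:
    """Cumulative units delivered by the end of `quarter` under quarterly releases."""
    return UNITS_PER_RELEASE * quarter

for quarter in range(1, QUARTERS + 1):
    print(f"Q{quarter:2d}: waterfall {waterfall_level(quarter):2d}, incremental {incremental_level(quarter):2d}")
```

Under these assumptions, quarterly releases are ahead in every quarter except the last, when the single large release overtakes them (50 versus 36).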

Similarly, is your actuarial software continuously rolling out updates? Or does it feel out of date as soon as you install it, because new versions arrive only every 18 months or so? Just as your actuarial practice may be advancing, your actuarial software needs to keep pushing the boundaries as well.

If it isn’t, perhaps it’s time to take another look. If your software is holding you back by limiting how you really want to organize your actuarial functions, it may be time to evaluate your system again. You just might find an opportunity to unleash your actuaries to do much better work than you thought possible.

The actuarial department of the future does not look like the actuarial department of the past

Recent developments in technology and thought leadership in the actuarial world have inspired many companies to consider centralizing their actuarial modeling function. The decision is analogous to choosing between deploying software on-premises and using a hosted solution (i.e., decentralized or centralized actuarial modeling teams). Actuaries will do well to consider how they want to work and what software they will use as they set their structures for future success.

Next time:

How it’s made: Agile vs. waterfall development